USE CASE: Using swarms of mobile robots in smart factories

As part of the SmartEdge project, we are developing swarms of autonomous mobile robots and smart edge devices that can adapt themselves to dynamically changing environments while collaborating with each other to achieve common goals.

To realise the circular economy and promote local manufacturing, we need to build smarter, more adaptable factories that can produce a broader range of products in smaller batch sizes, i.e., custom products on demand. Local smart factories reduce transportation CO2 emissions and provide shorter, more robust supply chains.

Additionally, by producing products locally where they are consumed, components can be refurbished and recycled directly back into the manufacturing process, further reducing waste. Unlike large conventional factories, which have static production lines that may remain unchanged for years, smart factories need to be dynamic and flexible with little or no fixed infrastructure. Recent advances in autonomous mobile robots [1] and robotic flexible assembly cells [2] have shown how smart factories can be implemented to create these new manufacturing environments.

SmartEdge factories will show how centralised fixed manufacturing systems can evolve into swarms of autonomous mobile robots, communicating and collaborating at the edge, with minimal supervision by humans or central control systems.

Enabling Swarming Collaboration Among Intelligent Robots and Edge Devices

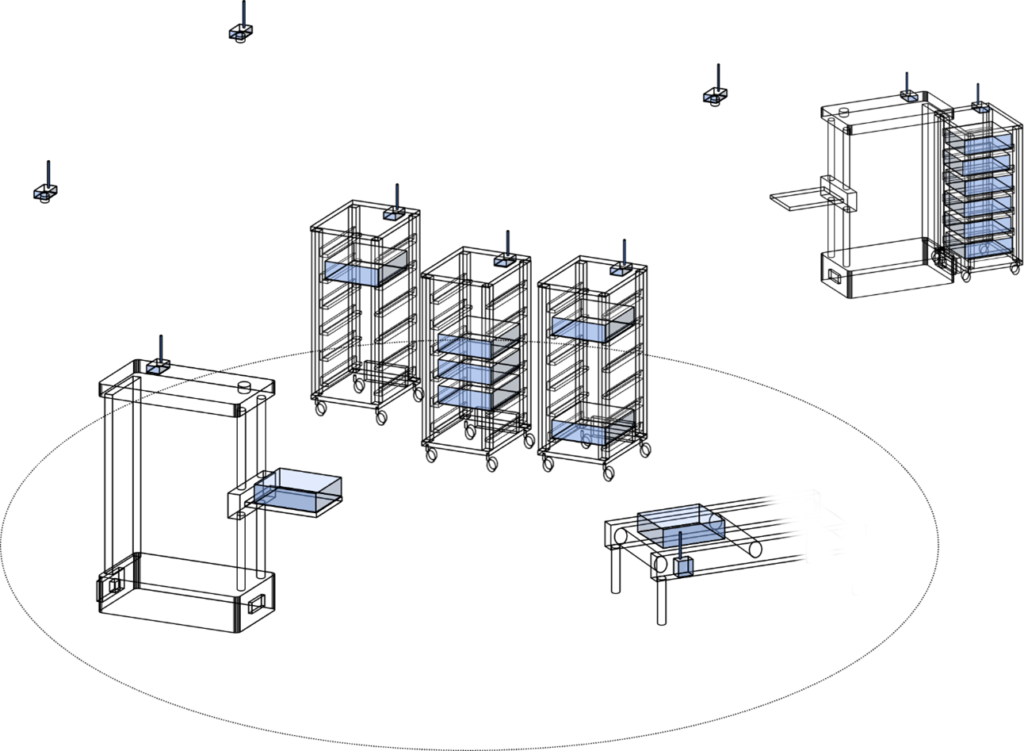

Figure 1: A swarm of autonomous mobile robots and smart edge devices

Autonomous mobile robots have been utilised for many years to move products and material around the factory floor. They are more flexible than fixed conveyor belts, but are still often guided by static control systems that follow fixed paths. Whilst there is some interaction with other devices and machinery in the factory, the communication is typically bespoke and centrally managed. To achieve the next level in the development of smart factories, the intelligent robots and other smart edge devices will not only need to perceive their dynamically changing environment, but also understand it by continually updating their internal semantic and physical models of the factory floor.

By exchanging high-level semantic events and sharing parts of their internal models, intelligent robots and smart edge devices can build a common semantic understanding of their world, enabling them to form swarms and collaborate to achieve common goals.

Figure 1 illustrates a swarm of intelligent robots and smart edge devices moving products between production cells. Looking down from above are ceiling cameras that share their model of the world as a semantic stream of information. Unlike typical robotic manufacturing facilities, which require highly predictable and repeatable environments, the autonomous mobile robots and smart edge devices must be able to operate in a dynamic and somewhat unpredictable world, where things are not exactly where they were left and unexpected obstacles must be circumvented.

Semantic SLAM: Enhancing Environmental Understanding and Reasoning

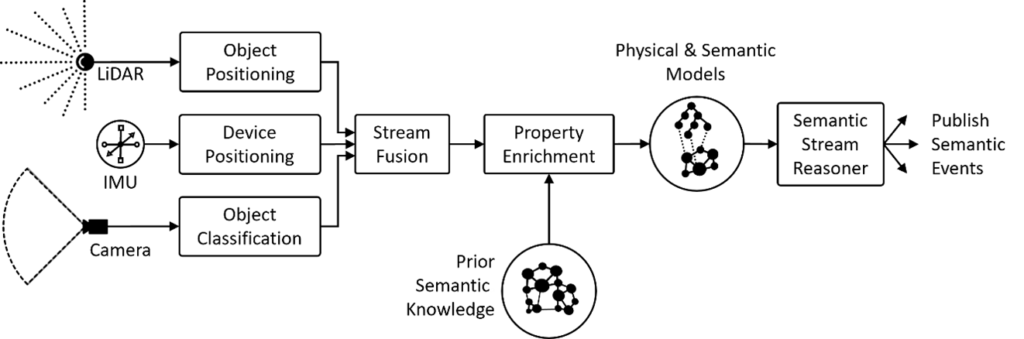

Figure 2: Semantic SLAM processing pipeline

Simultaneous Localization And Mapping (SLAM) [3] is a well-known technique that allows an autonomous vehicle or mobile robot to build a map of its environment whilst tracking its own position and pose within that map. The SLAM map represents walls and other objects as boundaries, but gives no indication of what those boundaries are. In other words, the SLAM map only partially describes the environment, because it carries no higher-level comprehension of the objects it contains.

SmartEdge extends this concept using a new technique called semantic SLAM [4], which combines raw data streams from different types of sensors to perform semantic segmentation, as illustrated in Figure 2. Semantic SLAM allows objects to be classified and positioned in 3D space at the same time. By assigning prior knowledge based on the classification, it is possible to associate properties with identified objects, such as their typical weight or whether they are moveable. In this way, the semantic SLAM map provides the autonomous mobile robot with a more complete understanding of its environment in the form of interlinked physical and semantic models. Using these models, the intelligent robot can reason about its environment and the appropriate behaviour to perform in a given scenario.
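The idea of attaching prior knowledge to a classified object can be illustrated with a minimal Python sketch. It is not the SmartEdge implementation: the class labels, the property names (`typical_weight_kg`, `moveable`), the numeric values, and the helpers `annotate` and `can_push_aside` are all hypothetical, chosen only to show how a class label could link a physical position to semantic properties that a robot can reason over.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical prior knowledge keyed by class label.
# The labels and values are illustrative assumptions, not SmartEdge data.
CLASS_PRIORS: Dict[str, Dict[str, object]] = {
    "pallet": {"typical_weight_kg": 25.0, "moveable": True},
    "wall": {"typical_weight_kg": None, "moveable": False},
}


@dataclass
class SemanticObject:
    label: str                            # class assigned by segmentation
    position: Tuple[float, float, float]  # location in the 3D map
    properties: Dict[str, object]         # priors inherited from the class


def annotate(label: str, position: Tuple[float, float, float]) -> SemanticObject:
    """Link a classified, positioned object to the priors of its class."""
    priors = CLASS_PRIORS.get(label, {})  # unknown classes get no priors
    return SemanticObject(label, position, dict(priors))


def can_push_aside(obj: SemanticObject) -> bool:
    """Toy reasoning step: only objects known to be moveable may be pushed."""
    return bool(obj.properties.get("moveable", False))


if __name__ == "__main__":
    for label in ("pallet", "wall", "unknown_blob"):
        obj = annotate(label, (1.0, 2.0, 0.0))
        print(label, "moveable:", can_push_aside(obj))
```

In this sketch an unrecognised object is treated conservatively as not moveable, which is one possible design choice when the semantic model has no prior for a class.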

The Role of Swarms in Smart Factory Environments

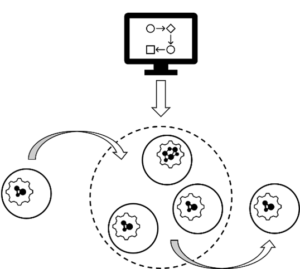

Figure 3: Swarm formation

By forming swarms, sharing parts of their internal models, and exchanging high-level semantic events, autonomous robots and smart edge devices can collectively better understand their environment and collaborate on collective tasks. For example, part of a robot’s operational area may be occluded behind an obstacle but is visible to other smart devices in the swarm; by exchanging parts of their models, members of the swarm can fill in the missing knowledge of their environment.
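The occlusion example can be sketched as a merge of two partial maps. The following Python snippet is a simplified illustration under strong assumptions: both devices are assumed to use the same grid size and coordinate frame, each cell is either `None` (not observed), `0` (free), or `1` (occupied), and the function name `merge_views` is hypothetical. Real model exchange in SmartEdge operates on semantic models rather than a bare grid.

```python
from typing import List, Optional

Grid = List[List[Optional[int]]]  # None = not observed, 0 = free, 1 = occupied


def merge_views(own: Grid, peer: Grid) -> Grid:
    """Fill cells the robot could not observe with the peer's observation.

    Assumes both grids share the same size and coordinate frame.
    The robot's own observations take priority over the peer's.
    """
    return [
        [o if o is not None else p for o, p in zip(own_row, peer_row)]
        for own_row, peer_row in zip(own, peer)
    ]


if __name__ == "__main__":
    # The robot cannot see the right-hand column (occluded by an obstacle).
    robot_view: Grid = [[0, None],
                        [0, None]]
    # A ceiling camera sees the occluded column but misses one other cell.
    camera_view: Grid = [[0, 1],
                         [None, 0]]
    print(merge_views(robot_view, camera_view))
```

Giving priority to the robot's own observations is only one possible policy; a real system would more likely weigh observations by confidence and timestamp.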

Figure 3 illustrates how a swarm can be formed in the smart factory scenario. Initially, a task is defined in the form of a SmartEdge recipe, which specifies the capabilities and steps necessary to perform the task. The recipe is downloaded to a smart seed device that starts to form the swarm. The seed device assesses which capabilities the swarm still lacks to perform the required steps and co-opts available autonomous mobile robots or smart edge devices into the swarm. When a device is no longer required, it is free to leave and join a new swarm where it is needed.
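The formation steps described above can be sketched as a simple greedy selection. This is an illustrative Python sketch only: the actual SmartEdge recipe format and selection logic are not described here, so the capability names, the dictionary layout of a device, and the function `form_swarm` are assumptions made for the example.

```python
from typing import Dict, List, Set, Tuple

Device = Dict[str, object]  # hypothetical layout: {"name": str, "capabilities": set}


def form_swarm(
    required: Set[str], seed: Device, available: List[Device]
) -> Tuple[List[str], Set[str]]:
    """Greedily co-opt devices until the required capabilities are covered.

    Returns the swarm member names and any capabilities still missing.
    """
    swarm = [seed["name"]]
    # Capabilities the seed device cannot provide itself.
    missing = set(required) - set(seed["capabilities"])
    for device in available:
        if not missing:
            break  # every required capability is covered
        provided = missing & set(device["capabilities"])
        if provided:  # only co-opt devices that add something new
            swarm.append(device["name"])
            missing -= provided
    return swarm, missing


if __name__ == "__main__":
    # Capabilities a hypothetical recipe might require.
    recipe_capabilities = {"plan", "transport", "overhead_view"}
    seed = {"name": "seed", "capabilities": {"plan"}}
    candidates = [
        {"name": "amr-1", "capabilities": {"transport"}},
        {"name": "printer", "capabilities": {"print"}},
        {"name": "cam-1", "capabilities": {"overhead_view"}},
    ]
    members, still_missing = form_swarm(recipe_capabilities, seed, candidates)
    print(members, still_missing)
```

A device that contributes nothing the swarm still needs, such as the `printer` in this example, is left available for other swarms.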

In conclusion, the smart factory use case demonstrates how autonomous mobile robots and smart edge devices can leverage the SmartEdge toolchain. This will allow them to understand their environment by using common high-level semantics, form swarms to perform tasks, and exchange knowledge in order to operate in the dynamic and uncertain environments of future smart factories.

Author: David Bowden (Dell Technologies)

References:

[1] Schneier M, Bostelman R. Literature review of mobile robots for manufacturing. Gaithersburg, MD, USA: US Department of Commerce, National Institute of Standards and Technology; 2015. p. 21.

[2] Abd KK. Intelligent scheduling of robotic flexible assembly cells. Springer; 2015 Nov 8.

[3] Taheri H, Xia ZC. SLAM; definition and evolution. Engineering Applications of Artificial Intelligence. 2021 Jan 1;97:104032.

[4] Nguyen-Duc M, Le-Tuan A, Hauswirth M, Bowden D, Le-Phuoc D. SemRob: Towards Semantic Stream Reasoning for Robotic Operating Systems. arXiv preprint arXiv:2201.11625. 2022 Jan 27.